How to Convert a Spark DataFrame into a JSON File, Taking Keys from Column Names for Some Columns and from Column Values for Others

Hi Friends,

Today I would like to show a use case: converting a Spark DataFrame into a JSON file.

Converting a DataFrame to JSON is easy with DF.toJSON, but it always uses the column name as the key and the column's value as the value. What if, for some columns, I want the key to come from the column name, while for another column the key should come from that column's value instead? In every case, the value of each key is still the column's value.
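For reference, here is a minimal sketch of the default behaviour, assuming a local SparkSession (the object name `DefaultToJsonDemo` is only for illustration). Every key comes from a column name, and Age is written as a JSON number because it is an Int column:

```scala
import org.apache.spark.sql.SparkSession

object DefaultToJsonDemo {

  // Builds a one-row DataFrame and returns what toJSON produces for it
  def defaultJson(spark: SparkSession): Array[String] = {
    import spark.implicits._
    val df = Seq(("Anamika", 30, "Delhi")).toDF("Name", "Age", "City")
    // toJSON emits one JSON string per row, keyed by column name
    df.toJSON.collect()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("DefaultToJsonDemo")
      .master("local[*]")
      .getOrCreate()
    defaultJson(spark).foreach(println)
    // prints {"Name":"Anamika","Age":30,"City":"Delhi"}
    spark.stop()
  }
}
```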

Below are the steps, with example data, showing how to reshape the DataFrame with pivot and produce the expected JSON.

Here are the input DataFrame and the expected output format.

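Input DataFrame:

| Name | Age | City | Data |
|---|---|---|---|
| Anamika | 30 | Delhi | Map(First -> ABC) |
| Ananya | 25 | Delhi | Map(Second -> XYZ) |
| Pritam | 12 | U.P. | Map(Third -> MNO) |

Output JSON: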

{"Name":"Anamika","Age":"30","City":"Delhi","Delhi":{"First":"ABC"}}

{"Name":"Ananya","Age":"25","City":"Delhi","Delhi":{"Second":"XYZ"}}

{"Name":"Pritam","Age":"12","City":"U.P.","U.P.":{"Third":"MNO"}}

If you look carefully at the output above, the last key is taken from a column's value (City) rather than from a column's name like the other keys.

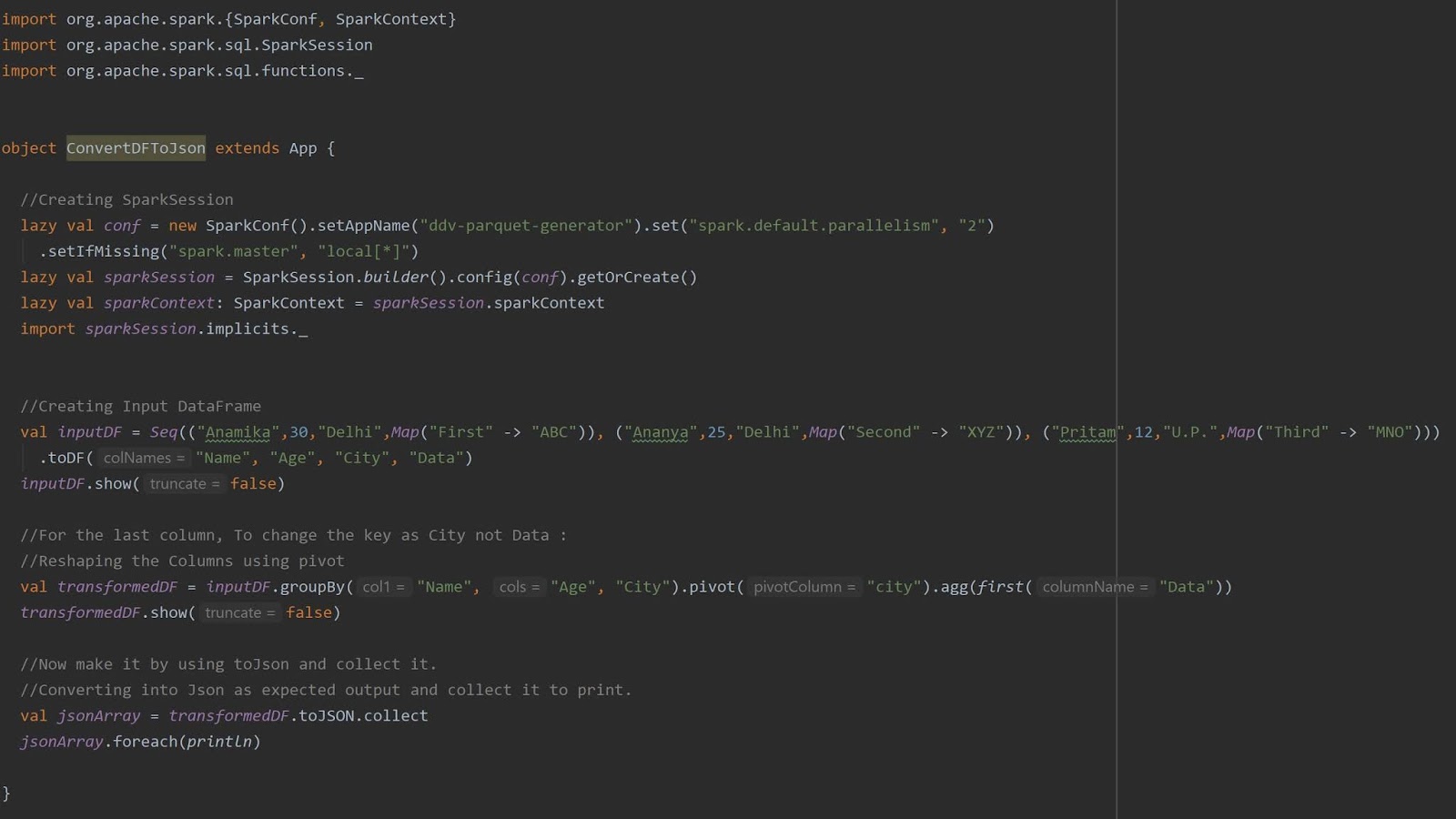
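Why pivot does the trick: it turns every distinct City value into a column of its own, filled with that row's Data map and null in the other rows, and with default settings toJSON leaves null fields out, so each JSON object keeps only its own city key. The sketch below (a hypothetical `PivotToJsonDemo` object, assuming a local SparkSession) also casts Age to string. That cast is an assumption added here to match the quoted "Age":"30" in the expected output; an Int column would be written as the number 30.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, first}

object PivotToJsonDemo {

  // Reshapes the sample data so each row's City value becomes a column name
  def pivoted(spark: SparkSession): DataFrame = {
    import spark.implicits._
    val inputDF = Seq(
      ("Anamika", 30, "Delhi", Map("First" -> "ABC")),
      ("Ananya", 25, "Delhi", Map("Second" -> "XYZ")),
      ("Pritam", 12, "U.P.", Map("Third" -> "MNO"))
    ).toDF("Name", "Age", "City", "Data")

    inputDF
      // Assumption: cast Age so toJSON writes "30" (quoted), as in the expected output
      .withColumn("Age", col("Age").cast("string"))
      .groupBy("Name", "Age", "City")
      // One new column per distinct City value, holding that row's Data map (null elsewhere)
      .pivot("City")
      .agg(first("Data"))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("PivotToJsonDemo")
      .master("local[*]")
      .getOrCreate()
    val df = pivoted(spark)
    println(df.columns.mkString(", ")) // prints Name, Age, City, Delhi, U.P.
    // Null pivot cells are left out of each JSON string, so only the row's own City key remains
    df.toJSON.collect().foreach(println)
    spark.stop()
  }
}
```

Row order after groupBy is not guaranteed, so the printed lines may come out in a different order than the expected output listed above.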

Below is the code, including the input DataFrame, to achieve this:

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions._

object ConvertDFToJson extends App {

  // Creating SparkSession
  lazy val conf = new SparkConf()
    .setAppName("ddv-parquet-generator")
    .set("spark.default.parallelism", "2")
    .setIfMissing("spark.master", "local[*]")

  lazy val sparkSession = SparkSession.builder().config(conf).getOrCreate()
  lazy val sparkContext: SparkContext = sparkSession.sparkContext

  import sparkSession.implicits._

  // Creating the input DataFrame
  val inputDF = Seq(
    ("Anamika", 30, "Delhi", Map("First" -> "ABC")),
    ("Ananya", 25, "Delhi", Map("Second" -> "XYZ")),
    ("Pritam", 12, "U.P.", Map("Third" -> "MNO"))
  ).toDF("Name", "Age", "City", "Data")

  inputDF.show(false)

  // For the last column, use the City value as the key instead of the column name "Data":
  // pivot turns each distinct City value into its own column holding that row's Data map
  val transformedDF = inputDF.groupBy("Name", "Age", "City").pivot("City").agg(first("Data"))

  transformedDF.show(false)

  // Convert each row to a JSON string, collect the strings to the driver and print them
  val jsonArray = transformedDF.toJSON.collect()
  jsonArray.foreach(println)

  sparkSession.stop()
}
```
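The title promises a JSON file, while the code above only prints the JSON strings. Here is a minimal sketch of writing the same reshaped DataFrame to disk, assuming a local SparkSession and a temporary output directory (the object name `WriteJsonFileDemo` and the path are hypothetical). It also passes the pivot values explicitly with `pivot("City", Seq("Delhi", "U.P."))`, which only makes sense when the City values are known in advance:

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.first

object WriteJsonFileDemo {

  // Pivots the sample data and writes it as JSON Lines under outputDir
  def writeJson(spark: SparkSession, outputDir: String): Unit = {
    import spark.implicits._
    val inputDF = Seq(
      ("Anamika", 30, "Delhi", Map("First" -> "ABC")),
      ("Ananya", 25, "Delhi", Map("Second" -> "XYZ")),
      ("Pritam", 12, "U.P.", Map("Third" -> "MNO"))
    ).toDF("Name", "Age", "City", "Data")

    // Listing the pivot values up front skips the extra job Spark otherwise runs to find distinct City values
    val transformedDF = inputDF
      .groupBy("Name", "Age", "City")
      .pivot("City", Seq("Delhi", "U.P."))
      .agg(first("Data"))

    // coalesce(1) gives a single part file; each line is one JSON object, as with toJSON
    transformedDF.coalesce(1).write.mode("overwrite").json(outputDir)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("WriteJsonFileDemo")
      .master("local[*]")
      .getOrCreate()
    // Hypothetical output location: a fresh temporary directory
    val outputDir = Files.createTempDirectory("df-json").resolve("out").toString
    writeJson(spark, outputDir)
    // Read the part file back as plain text to show the JSON lines
    spark.read.textFile(outputDir).collect().foreach(println)
    spark.stop()
  }
}
```

Note that `write.json` creates a directory of part files rather than one named file; `coalesce(1)` keeps it to a single part file, which is fine for small data but pushes every row through one task.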

Output:

I hope this post was helpful. Please like, comment, and share.

Thank you!
